Data Science Symposium 2023

8A-002 - Hörsaal Ostufer / Lecture Hall East

GEOMAR - Standort Ostufer / GEOMAR - East Shore

This 8th Data Science Symposium is part of a series of symposia organized by AWI, GEOMAR and Hereon that was established in 2017. We invite you to join us for talks, demonstrations and lively discussions on the overarching topics of data, infrastructures, initiatives, and data science in the earth sciences.


At DSS8, we would like to address two topics in particular: data aggregation across domains and scales, and collaborative data science and workflows. We plan an on-site meeting.

Registration and abstract submission are now open. To submit an abstract, log in with your institute account (click on "Single Sign-on / Shibboleth").

11:00 → 12:00 Program: Registration & Welcome, 8A-001 - Foyer

12:00 → 13:00 Lunch (1 h), 8A-001 - Foyer

13:00 → 13:15 Program: Session Introduction, 8A-002 - Hörsaal Ostufer / Lecture Hall East

13:15 → 15:00 Talks, 8A-002 - Hörsaal Ostufer / Lecture Hall East

13:15

Data-driven Attributing of Climate Events with a Climate Index Collection based on Model Data (CICMoD) 15m

Machine learning (ML), and in particular artificial neural networks (ANNs), have pushed the state of the art for many hard problems, e.g., image classification, speech recognition and time series forecasting. In climate science, ANNs show promise for identifying causally linked modes of climate variability, which are key to understanding the climate system and to improving the predictive skill of forecast systems. To attribute climate events in a data-driven way with ANNs, we need sufficient training data, which is often limited for real-world measurements. The data science community provides standard data sets for many applications. As a new data set, we introduce a collection of climate indices typically used to describe Earth System dynamics. This collection is consistent and comprehensive, as we derive the climate indices from control simulations of Earth System Models (ESMs) covering over 1,000 years. The data set is provided as an open-source framework that can be extended and customized to individual needs. It enables the development of new ML methodologies and serves as a benchmark for comparing results with existing methods and models. As examples, we use the data set to predict rainfall in the African Sahel region and the El Niño Southern Oscillation with various ML models. We argue that this new data set allows a thorough exploration of techniques from explainable artificial intelligence in order to obtain trustworthy models that are accepted by domain scientists. Our aim is to build a bridge between the data science community and researchers and practitioners in climate science to jointly improve our understanding of the climate system.
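The abstract does not spell out how individual indices are computed. A common construction, shown here as a generic illustration and not as the CICMoD code, turns an area-averaged monthly series from a model run into a standardized anomaly index: remove the mean annual cycle (monthly climatology), then divide the anomalies by their standard deviation. The function name and the assumption that the spatial average has already been taken are ours.

```python
from statistics import mean, pstdev


def standardized_index(monthly_values, months_per_year=12):
    """Standardized anomaly index from a monthly series (e.g. an area-mean SST).

    Removes the mean annual cycle and scales the anomalies to unit variance.
    """
    if len(monthly_values) % months_per_year != 0:
        raise ValueError("series must contain whole years")
    # Monthly climatology: mean of all Januaries, all Februaries, ...
    clim = [mean(monthly_values[m::months_per_year]) for m in range(months_per_year)]
    anomalies = [v - clim[i % months_per_year] for i, v in enumerate(monthly_values)]
    sigma = pstdev(anomalies)
    if sigma == 0:
        raise ValueError("series has no variability beyond its annual cycle")
    return [a / sigma for a in anomalies]


# Two synthetic years: a cold year followed by a warm year.
print(standardized_index([1.0] * 12 + [3.0] * 12))
```

Using a fixed climatology over the whole control run is a design choice that suits stationary control simulations; for transient runs one would typically detrend or use a moving reference period instead.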

Speaker: Mr. Marco Landt-Hayen (GEOMAR Helmholtz Centre for Ocean Research)

13:30

Forecast system for urban air quality in the city of Hamburg, Germany 15m

Air quality in urban areas is an important topic not only for science but also for concerned citizens, as it affects personal wellbeing and health. In the project SMURBS (SMART URBAN SOLUTIONS), Hereon developed a model to forecast urban air quality at high spatial and temporal resolution (CityChem), with the aim of carrying out exposure studies and future scenario calculations while also providing current air quality information to citizens. The daily model output is presented in the ‘Urban Air Quality Forecast’ Tool, which is based on an Esri ArcGIS application. The tool helps citizens and decision makers investigate 24-hour forecasts for seven pollutants (NO₂, NO, O₃, SO₂, CO, PM10 and PM2.5) along with the Air Quality Index (AQI). The model output is generated as Network Common Data Form (netCDF) files, which are brought into the GIS environment using the ArcGIS API for JavaScript. The netCDF files are converted into multidimensional rasters to display air pollutant information over a 24-hour period. These rasters are then shared as web services via Hereon’s Geohub Portal, from where they are consumed and visualized in the Urban Air Quality Forecast Tool. The tool's data are updated daily by cron jobs that run the complete workflow: fetching the newly generated model output and updating the shared web services. The tool thus offers many ways to investigate the current air quality in Hamburg.

Speaker: Rehan Chaudhary

13:45

Rapid segmentation and measurement of an abundant mudsnail: Applying superpixels to scale accurate detection of growth response to ocean warming 15m

Advances in computer vision are applicable across aquatic ecology to detect objects in images, ranging from plankton and marine snow to whales. Analyzing digital images enables the quantification of fundamental properties (e.g., species identity, abundance, size, and traits) for richer ecological interpretation. Yet less attention has been given to imaging fauna from coastal sediments, even though these ecosystems harbor a substantial fraction of global biodiversity and their members are critical for nitrification, carbon cycling, and filtering water pollutants. In this work, we apply a superpixel segmentation approach (superpixels are grouped representations of underlying colors and other basic features) to automatically extract and measure abundant hydrobiid snails from images and to assess size-distribution responses to experimental warming treatments. Estimated lengths were assessed against more than 4,500 manually measured individuals, revealing high accuracy and precision across samples. The method greatly expedited hydrobiid size measurement: it tallied more than 40,000 individuals, reduced the need for manual measurements, limited human measurement bias, generated reproducible data products whose segment quality can be inspected, and revealed the species' growth response to ocean warming.
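The abstract reports "high accuracy and precision" against manual measurements but does not name the metrics. A minimal sketch, assuming the usual method-comparison statistics: the mean difference between automated and manual lengths (bias, i.e. accuracy), the standard deviation of those differences (precision) and the root-mean-square error. The function name and the example values are hypothetical.

```python
import math
from statistics import mean, stdev


def agreement_stats(estimated, manual):
    """Compare automated length estimates with paired manual measurements.

    Returns the mean difference (bias, a measure of accuracy), the standard
    deviation of the differences (a measure of precision) and the RMSE.
    """
    if len(estimated) != len(manual) or len(estimated) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [e - m for e, m in zip(estimated, manual)]
    return {
        "bias": mean(diffs),
        "sd": stdev(diffs),
        "rmse": math.sqrt(mean(d * d for d in diffs)),
    }


# Hypothetical shell lengths (mm) for three snails, automated vs. manual.
print(agreement_stats([3.0, 5.0, 7.0], [2.0, 4.0, 6.0]))
```

Reporting bias and spread separately is useful here because a constant offset (e.g. from segment boundaries) can be corrected afterwards, whereas scatter cannot.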

Speaker: Liam MacNeil (GEOMAR EV)

14:00

Tracing Extended Internal Stratigraphy in Ice Sheets using Computer Vision Approaches 15m

The polar ice sheets of Greenland and Antarctica play a crucial role in the Earth's climate system. Accurately determining their past accumulation rates and understanding their dynamics is essential for predicting future sea level changes. Englacial stratigraphy, which assigns ages to radar reflections based on ice core samples, is one of the primary methods used to investigate these characteristics. Moreover, modern ice sheet models use paleoclimate forcings derived from ice cores, and stratigraphy contributes to improving and tuning such models.

However, the conventional semi-automatic process for mapping internal reflection horizons is prone to shortcomings in terms of continuity and layer geometry, and can be highly time-consuming. Furthermore, the abundance of unmapped radar profiles, particularly from the Antarctic ice sheet, underscores the need for more efficient and comprehensive methods.

To address this, machine learning techniques have been employed to automatically detect internal layer structures in radar surveys. Such approaches are well-suited to datasets with different radar properties, making them applicable to ice, firn, and snow data. In this study, a combination of classical computer vision methods and deep learning techniques, including Convolutional Neural Networks (CNN), were used to map internal reflection horizons.

The study's methodology, including the specific pre-processing methods, labeling technique, and CNN architecture and hyperparameters, is described. Results from more promising CNN architectures, such as U-Net, are presented and compared to those from image processing methods. Challenges, including incomplete training data, unknown numbers of internal reflection horizons in a profile, and a large number of features in a single radargram, are also discussed, along with potential solutions.

Speaker: Hameed Moqadam (Alfred Wegener Institute)

14:15

LightEQ: On-Device Earthquake Detection with Embedded Machine Learning 15m

The detection of earthquakes in seismological time series is central to observational seismology. Generally, seismic sensors passively record data and transmit it to the cloud or edge for integration, storage, and processing. However, transmitting raw data through the network is not an option for sensors deployed in harsh environments such as underwater, underground, or in rural areas with limited connectivity. This paper introduces an efficient data processing pipeline and a set of lightweight deep-learning models for seismic event detection that can be deployed on tiny devices such as microcontrollers. We conduct an extensive hyperparameter search and devise three lightweight models. We evaluate our models on the Stanford Earthquake Dataset and compare them with a basic STA/LTA detection algorithm and with state-of-the-art machine learning models, i.e., CRED, EQTransformer, and LCANet. For example, our smallest model consumes 193 kB of RAM and achieves an F1 score of 0.99 with just 29k parameters. Compared to CRED, which has an F1 score of 0.98 and 293k parameters, we reduce the number of parameters by a factor of 10. Deployed on Cortex-M4 microcontrollers, the smallest version of our model has an inference time of 932 ms for 1 minute of raw data, an energy consumption of 5.86 mJ, and a flash requirement of 593 kB. Our results show that resource-efficient, on-device machine learning for seismological time series data is feasible and enables new approaches to seismic monitoring and early warning applications.
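The study benchmarks against "a basic STA/LTA detection algorithm" without giving its variant or settings. A minimal sketch of the classic form, assuming trailing windows over squared amplitudes: the ratio of the short-term average (STA) to the long-term average (LTA) energy rises sharply at an onset, and a detection is triggered when it reaches a threshold. Window lengths and threshold below are illustrative, not the values used in the study.

```python
def classic_sta_lta(signal, n_sta, n_lta):
    """Ratio of short-term to long-term average signal energy.

    Both windows are trailing windows ending at the current sample. Samples
    before a full long-term window is available get a ratio of 0.0.
    """
    if not 0 < n_sta < n_lta:
        raise ValueError("require 0 < n_sta < n_lta")
    # Prefix sums of squared amplitudes make each window sum O(1).
    prefix = [0.0]
    for x in signal:
        prefix.append(prefix[-1] + x * x)
    ratio = [0.0] * len(signal)
    for i in range(n_lta - 1, len(signal)):
        sta = (prefix[i + 1] - prefix[i + 1 - n_sta]) / n_sta
        lta = (prefix[i + 1] - prefix[i + 1 - n_lta]) / n_lta
        ratio[i] = sta / lta if lta > 0 else 0.0
    return ratio


def first_trigger(ratio, threshold):
    """Index of the first sample whose ratio reaches the threshold, or None."""
    for i, r in enumerate(ratio):
        if r >= threshold:
            return i
    return None


# Synthetic trace: constant background noise followed by a strong arrival.
trace = [1.0] * 100 + [10.0] * 10
ratio = classic_sta_lta(trace, n_sta=5, n_lta=50)
print(first_trigger(ratio, threshold=3.0))  # 100
```

Its appeal as a baseline is exactly what makes it cheap on a microcontroller: a few additions and one division per sample, with no learned parameters.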

Next step:

A more in-depth analysis of seismic data on the sensors, such as detecting P and S waves.

*This paper has already been accepted and peer-reviewed.*

Speaker: Tayyaba Zainab

14:30

Recent Highlights from Helmholtz AI Consulting in Earth & Environment 15m

As Helmholtz AI consultants we support researchers in their machine learning and data science projects. Since our group was founded in 2020, we have completed over 40 projects in various subfields of Earth and Environmental sciences, and we have established best practices and standard workflows. We cover the full data science cycle from data handling to model development, tuning, and roll-out. In our presentation, we show recent highlights to give an impression of how we support researchers in the successful realisation of their machine learning projects and help you to make the most of your scientific data.

In particular, we will present our contributions to:

* Detecting sinkhole formation near the Dead Sea using computer vision techniques (Voucher by D. Al-Halbouni, GEOMAR)

* Integrating trained machine learning algorithms into Earth system models (Voucher by S. Sharma, HEREON)

* Encoding ocean model output data for scientific data sonification (Voucher by N. Sokolova, AWI)

* Operational machine learning: automated global ocean wind speed predictions from remote sensing data

Our services as Helmholtz AI consultants are available to all Helmholtz researchers free of charge. We offer support through so-called vouchers: projects of up to six months with clearly defined contributions and goals. Beyond individual support, computational resources and training courses are available through Helmholtz AI. If you would like to get in touch, please contact us in person during the symposium, via consultant-helmholtz.ai@dkrz.de, or at https://www.helmholtz.ai/themenmenue/you-helmholtz-ai/ai-consulting/index.html.

Speaker: Caroline Arnold (Deutsches Klimarechenzentrum)

14:45

The NFDI4Earth Academy – Your training network to bridge Earth System and Data Science 15m

The NFDI4Earth Academy is a network of early-career (doctoral and postdoctoral) scientists interested in bridging Earth System and Data Sciences beyond institutional borders. The research networks Geo.X, Geoverbund ABC/J, and DAM offer an open science and learning environment covering specialized training courses and collaborations within the NFDI4Earth consortium, with access to all NFDI4Earth innovations and services. Academy fellows advance their research projects by exploring and integrating new methods and by connecting with like-minded scientists in an agile, bottom-up, peer-mentored community. We support young scientists in developing the skills and mindset for open and data-driven science across disciplinary boundaries.

The first cohort of fellows has successfully completed its first six months in the Academy and is looking forward to a number of exciting events this year, such as a Summer School, a Hackathon and a Think Tank event. Furthermore, the second call for fellows will be released this autumn.

Speaker: Ms. Effi-Laura Drews (Forschungszentrum Jülich; Geoverbund ABC/J)


15:00 → 15:30 Coffee (30 min), 8A-001 - Foyer

15:30 → 16:15 Talks: Keynote by Prof. Dr. Kevin Köser (CAU Kiel), 8A-002 - Hörsaal Ostufer / Lecture Hall East

16:15 → 16:30 Program: Group picture, 8A-002 - Hörsaal Ostufer / Lecture Hall East

16:30 → 18:30 Poster session, 8A-001 - Foyer

18:30 → 20:30 Dinner (2 h), 8A-001 - Foyer


08:00 → 08:45 Program: Registration, 8A-001 - Foyer

08:45 → 10:30 Talks, 8A-002 - Hörsaal Ostufer / Lecture Hall East

08:45

The project “underway”-data and the MareHub initiative: A basis for joint marine data management and infrastructure 15m

Coordinated by the German Marine Research Alliance (Deutsche Allianz Meeresforschung, DAM), the project “Underway”-Data is supported by the marine science centers AWI, GEOMAR and Hereon of the Helmholtz Association research field “Earth and Environment”. AWI, GEOMAR and Hereon develop the marine data hub (MareHub). The MareHub initiative is a contribution to the DAM. It builds a decentralized data infrastructure for processing, long-term archiving and dissemination of marine observation and model data and data products. The “Underway”-Data project aims to improve and standardize systematic data collection and data evaluation for expeditions with German research vessels. It supports scientists with their data management duties and fosters (data) science through FAIR and open access to marine research data. Together, the project “Underway”-Data and the MareHub initiative form the basis of a coordinated data management infrastructure supporting German marine sciences and international cooperation. The data and infrastructure of these joint efforts contribute to the NFDI and EOSC.

This talk will present the above-mentioned connections between the different initiatives and give further details about the “Underway”-Data project.

Speaker: Gauvain Wiemer (Deutsche Allianz Meeresforschung)

09:00

The MareHub initiative: A basis for joint marine data infrastructures for Earth System Sciences 15m

The MareHub is a cooperation to bundle the data infrastructure activities of the marine Helmholtz centers AWI, GEOMAR and Hereon as a contribution to the German Marine Research Alliance and its complementary project “Underway”-Data.

The MareHub is part of the DataHub, a common initiative of all centers of the Helmholtz Association in the research field Earth and Environment. The overarching goal is to build a sustainable distributed data and information infrastructure for Earth System Sciences.

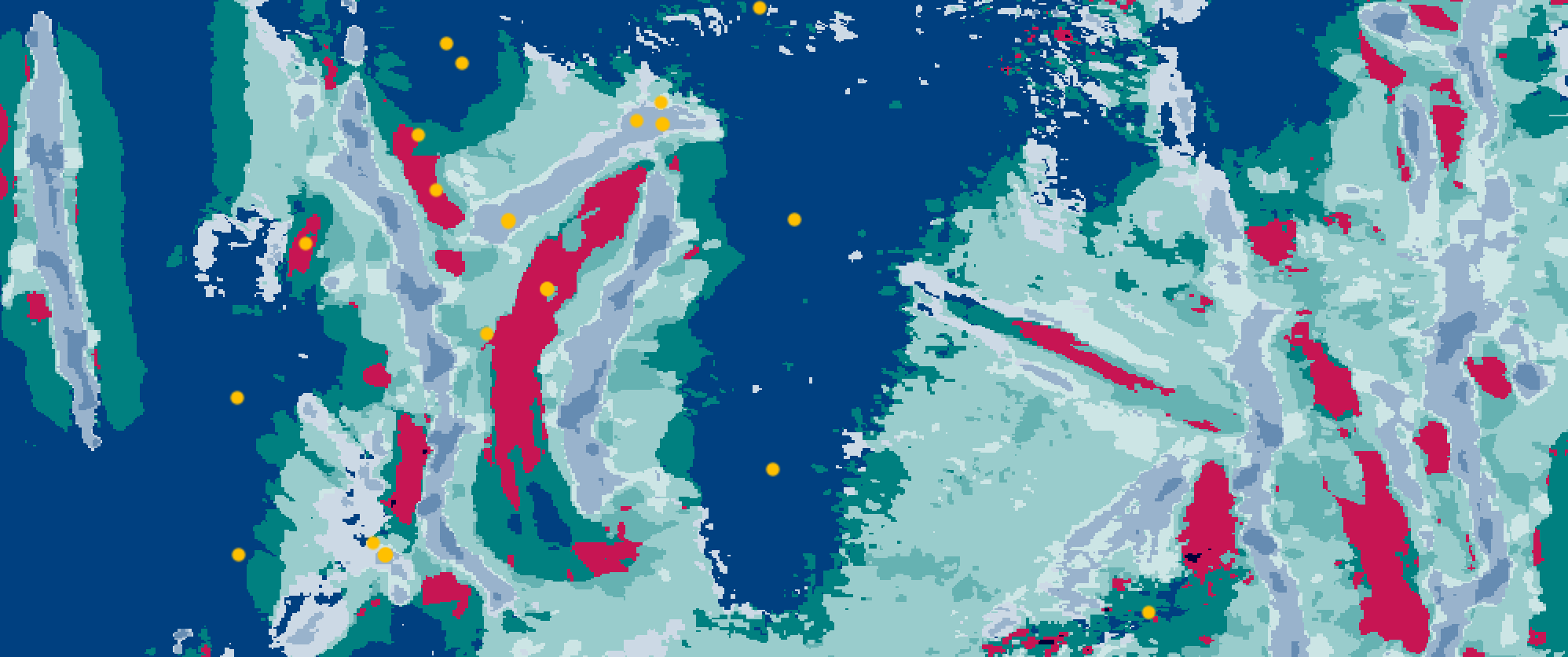

Common topics of the MareHub include the provision of standardized interfaces and SOPs, as well as solutions for the long-term storage and dissemination of marine observations, model data, and data products. Besides making marine research data centrally findable and easily accessible in the portal marine-data.de, the MareHub works on the aggregability and visualization of specific as well as standardized and curated data collections. Visualisation takes place in the form of thematic data viewers with content from oceanographic and seabed observation variables, video and image data, hydroacoustic data, bathymetric seabed maps, remote sensing data and sample data. These thematic viewers are developed on a sustainable generic or project basis.

In this context, standardized machine-readable data services and web map services will be established to enable the dissemination of marine data in international networks and to support advanced data science in the scientific community.

Speaker: Angela Schäfer (Alfred Wegener Institute - Helmholtz Centre for Polar and Marine Research)

09:15

Automation of Workflows for Numerical Simulation Data and Metadata 15m

Recording the workflow of numerical simulations and their postprocessing is an important component of quality management because it makes, for example, CFD result files reproducible. It has been implemented in an existing coastal engineering workflow and has proven its worth in practical application.

The data history recording is achieved by saving the following information as character variables together with the actual results in CF-compliant NetCDF files:

• content of the steering files

• parameterization of simulation and postprocessing

• names of initial and boundary datasets

• names and versions of the simulation and analysis programs used

Each program inherits this information from its predecessor, adds its own information (e.g., its steering file), and writes both the old and the new information to its result file. As a consequence, a researcher who downloads just the result file of the last program in the processing chain can access the recorded metadata of the whole chain.

To make use of these metadata, a second automated workflow has been established to archive the results and store the metadata in a Metadata Information System (https://datenrepository.baw.de). A Java-based postprocessor has been extended to evaluate the above-mentioned NetCDF files and convert the information into ISO-compliant XML files. The postprocessor first copies the numerical results to long-term storage via a REST interface and, if the copy succeeds, imports the XML metadata into the Metadata Information System (MIS) via a CSW-T interface. The MIS offers interactive search and download links to the long-term storage.
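To make the inheritance step concrete (each program copies its predecessor's records and appends its own), here is a minimal sketch. It uses plain Python dicts as stand-ins for a result file's metadata rather than real NetCDF I/O; the key pattern `history_step_NN`, the JSON encoding of each record and the program names are hypothetical, not the authors' actual conventions.

```python
import json


def inherit_history(parent_attrs, program, version, steering_text, inputs):
    """Build the provenance entries for a new result file.

    Everything recorded by the predecessor is copied unchanged, and one new
    record describing this processing step is appended.
    """
    attrs = dict(parent_attrs)  # copy; the parent's entries stay untouched
    step = sum(1 for key in attrs if key.startswith("history_step_")) + 1
    record = {
        "program": program,
        "version": version,
        "steering_file": steering_text,
        "inputs": list(inputs),
    }
    # Stored as a single character string, mirroring character variables.
    attrs[f"history_step_{step:02d}"] = json.dumps(record, sort_keys=True)
    return attrs


# Two-step chain: simulation, then postprocessing of its result.
sim = inherit_history({}, "simulator", "1.0", "T_END = 3600", ["bathymetry.nc", "boundary.nc"])
post = inherit_history(sim, "postprocessor", "2.3", "VARIABLE = water_level", ["sim_result.nc"])
for key in sorted(post):
    print(key, json.loads(post[key])["program"])
```

Because every step only appends, the last file in the chain is sufficient to reconstruct the full history, which is what lets the archiving postprocessor read a single NetCDF file per chain.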

Both workflows follow the FAIR principles, e.g., by making numerical results and their metadata available to the public. The automation eliminates error-prone manual editing and processing, relieves users, and assures quality.

Speaker: Mr. Peter Schade (Bundesanstalt für Wasserbau)

09:30

Streamlining Remote Sensing Data into Science-Ready Datacubes with rasdaman 15m

Remote sensing data is generally distributed as individual scenes, often in several variants depending on the level of preprocessing applied. While filtering these scenes by metadata, such as intersection with areas of interest or acquisition time, is generally well supported, users are responsible for any additional filtering, processing, and analytics. This requires users to be experienced in provisioning hardware and deploying various tools and programming languages to work with the data. This has led to a trend towards services that consolidate remote sensing data into analysis-ready datacubes (ARD). Users can run analytics on these data directly on the server through the service and receive exactly the results they are interested in.

The rasdaman Array Database is one of the most comprehensive solutions for ARD service development. It is a classical DBMS specializing in the management and analysis of multidimensional array data, such as remote sensing data. On top of its domain-agnostic array data model, rasdaman implements support for the OGC/ISO coverage model on regularly gridded data, also known as geo-referenced datacubes. It offers access to the managed datacubes via standardized and open OGC APIs for coverage download (Web Coverage Service or WCS), processing (Web Coverage Processing Service or WCPS), and map portrayal (Web Map Service or WMS, and Web Map Tile Service or WMTS). Developing higher-level spatio-temporal services using these APIs is similar to developing Web applications with an SQL database.
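WCPS queries can be sent over plain HTTP as a WCS `ProcessCoverages` request. A minimal sketch that only builds the request URL; the endpoint, the coverage name `AvgLandTemp` and its axis names (`Lat`, `Long`, `ansi`) are assumptions modeled on rasdaman's demo data, so a real server's GetCapabilities and DescribeCoverage responses should be checked first. Fetching the URL, e.g. with `urllib.request.urlopen`, would return the extracted time series as CSV.

```python
from urllib.parse import urlencode


def wcps_request_url(endpoint, coverage, lat, lon, start, end):
    """Build a WCS 2.0.1 ProcessCoverages request carrying a WCPS query.

    The query extracts a time series at one point and encodes it as CSV.
    The axis names Lat, Long and ansi are assumptions about the coverage.
    """
    query = (
        f"for $c in ({coverage}) "
        f'return encode($c[Lat({lat}), Long({lon}), ansi("{start}":"{end}")], "text/csv")'
    )
    params = {
        "service": "WCS",
        "version": "2.0.1",
        "request": "ProcessCoverages",
        "query": query,
    }
    return f"{endpoint}?{urlencode(params)}"


url = wcps_request_url(
    "http://localhost:8080/rasdaman/ows",  # assumed local rasdaman endpoint
    "AvgLandTemp",                          # hypothetical coverage name
    53.08, 8.80, "2014-01", "2014-12",
)
print(url)
```

The SQL analogy in the abstract holds here: the subsetting and encoding run inside the server, and only the small CSV result travels over the network.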

In this talk, we discuss the current status of rasdaman and share our experiences in building ARD services across a wide range of scales, from nanosats in orbit with less computing power than modern smartphones to data centers and global federations.

Speaker: Dr. Dimitar Mishev (Constructor University)

09:45

DS2STAC: A Python package for harvesting and ingesting (meta)data into STAC-based catalog infrastructures 15m

Despite the vast growth in accessible data from environmental sciences over the last decades, it remains difficult to make this data openly available according to the FAIR principles. A crucial requirement for this is the provision of metadata through standard catalog interfaces or data portals for indexing, searching, and exploring the stored data.

With the release of the community-driven SpatioTemporal Asset Catalog (STAC), this process has been substantially simplified, as STAC is based on highly flexible and lightweight GeoJSON documents instead of large XML files. The number of STAC users has therefore increased rapidly, and STAC now features a comprehensive ecosystem with numerous extensions. This is also why we have chosen STAC as the central catalog framework in our research project Cat4KIT, in which we develop an open-source software stack for the FAIRification of environmental research data.

A central element of this project is the automatic harvesting of (meta)data and services from different data servers, providers and services. The DS2STAC module therefore contains tailor-made harvesters for different data sources and services, a metadata validator, and a database for storing the STAC items, collections and catalogs. Currently, DS2STAC can harvest from THREDDS servers, Intake catalogs, and SensorThings APIs. In all three cases, it creates and manages consistent STAC items, catalogs and collections, which are then made openly available through the pgSTAC database and STAC-FastAPI to allow user-friendly interaction with our environmental research data.
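The abstract does not describe DS2STAC's own API, so the following is not DS2STAC code. It is a minimal sketch of the kind of document a harvester emits for each dataset: a STAC 1.0.0 Item built by hand as a GeoJSON dict with the fields the Item specification requires (`type`, `stac_version`, `id`, `geometry`, `bbox`, `properties.datetime`, `links`, `assets`). The ID, bounding box and THREDDS URL are hypothetical.

```python
import json
from datetime import datetime, timezone


def make_stac_item(item_id, bbox, when, asset_href, media_type):
    """Return a minimal STAC 1.0.0 Item (a GeoJSON Feature) as a dict."""
    west, south, east, north = bbox
    # Footprint polygon derived from the bbox: closed ring, counter-clockwise.
    ring = [[west, south], [east, south], [east, north], [west, north], [west, south]]
    return {
        "type": "Feature",
        "stac_version": "1.0.0",
        "id": item_id,
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "bbox": [west, south, east, north],
        "properties": {
            "datetime": when.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        },
        "links": [],
        "assets": {
            "data": {"href": asset_href, "type": media_type, "roles": ["data"]},
        },
    }


item = make_stac_item(
    "example-station-2023",                         # hypothetical ID
    (11.0, 47.4, 11.2, 47.6),
    datetime(2023, 1, 1, tzinfo=timezone.utc),
    "https://thredds.example.org/data/example.nc",  # hypothetical THREDDS URL
    "application/x-netcdf",
)
print(json.dumps(item, indent=2))
```

In practice a library such as pystac can build and validate such items; the plain-dict version shows why STAC is lightweight compared with XML metadata records.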

In this presentation, we want to demonstrate DS2STAC and also show its functionalities within our Cat4KIT-framework. We also want to discuss further use-cases and scenarios and hence propose DS2STAC as a modular tool for harvesting (meta)data into STAC-based catalog infrastructures.

Speaker: Mostafa Hadizadeh (Karlsruher Institut für Technologie - Institut für Meteorologie und Klimaforschung Atmosphärische Umweltforschung (IMK-IFU), KIT-Campus Alpin)

10:00

The Renewed Marine Data Infrastructure Germany (MDI-DE) 15m

Searching for, accessing and evaluating geospatial datasets is often a challenging task for researchers, managers, administrations and interested users because the data sources are highly distributed.

To provide a single access point to governmentally provided datasets for marine applications, the project “Marine Spatial Data Infrastructure Germany” (MDI-DE) was launched in 2013. Its geoportal has been completely renewed on the basis of open-source software to follow the FAIR principles and to comply with international standards for web services and metadata.

The MDI-DE project is driven by a cooperation of three federal and three regional authorities, which represent the coastal federal states of Germany. Each of the signatory authorities operates its own spatial data infrastructure (SDI), based on different technical components, to publish web services from the marine domain, among others. Accordingly, one core component of MDI-DE is a metadata search interface across all participants’ SDIs. The marine-related services they provide are regularly harvested into MDI-DE's own metadata catalogue via OGC CSW services. The interface enables users to find datasets using keywords and filters. Besides formal and legal information, it offers the possibility to visualise the services and to download the underlying datasets.
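The harvesting and search described above rely on OGC CSW. A minimal sketch of a CSW 2.0.2 `GetRecords` request in key-value-pair form, filtering with a CQL full-text constraint; the endpoint and keyword are hypothetical, and which constraint languages and parameters a given catalogue accepts should be checked in its GetCapabilities response.

```python
from urllib.parse import urlencode


def csw_getrecords_url(endpoint, keyword, max_records=10):
    """Build a CSW 2.0.2 GetRecords KVP request with a CQL full-text filter."""
    safe_keyword = keyword.replace("'", "''")  # escape quotes for the CQL string literal
    params = {
        "service": "CSW",
        "version": "2.0.2",
        "request": "GetRecords",
        "typeNames": "csw:Record",
        "resultType": "results",
        "elementSetName": "brief",
        "maxRecords": str(max_records),
        "constraintLanguage": "CQL_TEXT",
        "constraint_language_version": "1.1.0",
        "constraint": f"AnyText LIKE '%{safe_keyword}%'",
    }
    return f"{endpoint}?{urlencode(params)}"


print(csw_getrecords_url("https://csw.example.org/csw", "seagrass"))
```

A harvester would page through the full result set with `startPosition` and `maxRecords` rather than filtering by keyword; the keyword filter corresponds to the user-facing search interface.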

Second, a web mapping application is part of MDI-DE. Here, a large number of categorised view services, originating from the consortium and from external institutions, is available. Moreover, the portal enables any user to import their own geodata from disk or from OGC-conformant web services. Further, a map server is deployed within MDI-DE to publish harmonised WMS and WFS services based on distributed data sources.

MDI-DE and the German Marine Research Alliance (DAM) plan to intensify their cooperation and to increase their public visibility by referencing each other’s portals, integrating their metadata catalogues and jointly developing new products.

Speaker: Henning Gerstmann (Bundesamt für Naturschutz)

10:15

OSIS: From Past to Present and Future 15m

The Ocean Science Information System (OSIS) was first established in 2008 at GEOMAR as a metadata-centric platform for ongoing marine research collaborations. It has become a vital platform for collecting, storing, managing and distributing ocean science (meta)data. We will discuss how OSIS has enabled multidisciplinary ocean research and facilitated collaborations among scientists from different fields.

Furthermore, we will present the major refactoring that is currently underway to move OSIS to a new microservice architecture. This architecture will not only update the OSIS website but will also expose all of the information stored in the OSIS database as a RESTful API webservice. This move will make it easier for researchers and other stakeholders to access and use the data, promoting transparency and open data practices.

Additionally, we will discuss how OSIS will continue to evolve to meet the changing needs of ocean research and the DataHub community in the future.

Join us to learn more about the history and future of OSIS, how the move to microservices and APIs will enhance the platform’s capabilities and impact, and how it will continue to add scientific visibility and reusability to your research data output.

Speaker: FDI Digital Research Services (GEOMAR)


10:30 → 11:00 Coffee (30 min), 8A-001 - Foyer

11:00 → 12:45 Talks, 8A-002 - Hörsaal Ostufer / Lecture Hall East

11:00

A progress report on OPUS - The Open Portal to Underwater Soundscapes to explore global ocean soundscapes 15m

In an era of rapid anthropogenically induced changes in the world’s oceans, ocean sound is considered an essential ocean variable (EOV) for understanding and monitoring long-term trends in anthropogenic sound and its effects on marine life and ecosystem health.

The International Quiet Ocean Experiment (IQOE) has identified the need to monitor the distribution of ocean sound in space and time, e.g. current levels of anthropogenic sound in the ocean.

The OPUS (Open Portal to Underwater Soundscapes) data portal is currently being developed at the Alfred Wegener Institute Helmholtz Centre for Polar and Marine Research (AWI) in Bremerhaven, Germany, in the framework of the German Marine Research Alliance (DAM) DataHUB/MareHUB initiative.

OPUS is designed as an expeditious discovery tool for archived passive acoustic data. It is envisioned to promote the use of acoustic data collected and archived worldwide, thereby contributing to an improved understanding of the world’s ocean soundscapes and of anthropogenic impacts on them across various temporal and spatial scales. To motivate data provision and use, OPUS adopts the FAIR principles for submitted data while assigning the highly permissive CC BY 4.0 license to all OPUS products (visualizations of the audio data and lossy-compressed audio). OPUS provides direct access to underwater recordings collected worldwide, allowing a broad range of stakeholders (i.e., the public, artists, journalists, fellow scientists, regulatory agencies, consulting companies and the marine industry) to learn about and access these data for their respective needs. With the data becoming openly accessible, the public and marine stakeholders will be able to easily compare soundscapes from different regions, seasons and environments, with and without anthropogenic contributions.

Here, an update on achieved and upcoming milestones and developments of OPUS will be presented, together with ongoing and future case studies and use cases of the portal for different user groups.

Speaker: Karolin Thomisch (Alfred-Wegener-Institut Helmholtz-Zentrum für Polar- und Meeresforschung)

11:15

The Coastal Pollution Toolbox: A collaborative workflow to provide scientific data and information on marine and coastal pollution 15m

The interaction between pollution, climate change, the environment, and people is complex. This complex interplay is particularly relevant in coastal regions, where the land meets the sea. It challenges the scientific community to find new ways of turning usable information into actionable knowledge that is actually used to manage the impact of marine pollution. Therefore, a better understanding is needed of the tools and approaches that facilitate user engagement in co-designing marine pollution research in support of sustainable development.

This presentation highlights a collaborative workflow to provide scientific data and information on marine and coastal pollution. The Coastal Pollution Toolbox provides innovative tools and approaches from marine pollution science to the scientific community, the public, agencies, and representatives of policymaking and regulation. The framework provides a suite of science communication, science and synthesis, and management products. Essential components for providing these tools are the underlying data infrastructures, including data management technologies and workflows.

The presentation concludes by establishing the scope for a ‘last mile’ approach supporting engagement and co-designing by incorporating scientific evidence of pollution into decision-making.

Keywords: Marine pollution, participatory approaches, co-design, engagement, tools and approaches, HCDC infrastructure

Speaker: Marcus Lange (Helmholtz-Zentrum Hereon)

11:30

The Earth Data Portal for Finding and Exploring Research Content 15m

Digitization and the Internet in particular have created new ways to find, re-use, and process scientific research data. Many scientists and research centers want to make their data openly available, but often the data is still not easy to find because it is distributed across different infrastructures. In addition, the rights of use, citability and data access are sometimes unclear.

The Earth Data Portal aims to provide a single point of entry for discovery and re-use of scientific research data in compliance with the FAIR principles. The portal aggregates data of the earth and environment research area from various providers and improves its findability. As part of the German Marine Research Alliance and the Helmholtz-funded DataHub project, leading German research centers are working on joint data management concepts, including the data portal.

The portal offers a modern web interface with a full-text search, facets and explorative visualization tools. Seamless integration into the Observation to Analysis and Archives Framework (O2A) developed by the Alfred Wegener Institute also enables automated data flows from data collection to publication in the PANGAEA data repository and visibility in the portal.
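The portal abstract mentions full-text search combined with facets over data aggregated from several providers. The following minimal sketch illustrates that general idea on a few in-memory records; the records, field names and functions are hypothetical and are not the Earth Data Portal's actual implementation, which would run on a search engine rather than a Python list.

```python
from collections import Counter

# Hypothetical, already harmonized metadata records from several providers.
# Field names and values are illustrative only.
RECORDS = [
    {"title": "CTD profiles Fram Strait", "provider": "Provider A", "parameter": "temperature"},
    {"title": "Sea surface temperature Baltic Sea", "provider": "Provider B", "parameter": "temperature"},
    {"title": "Nutrient concentrations North Sea", "provider": "Provider C", "parameter": "nitrate"},
]


def search(records, text="", **facets):
    """Case-insensitive full-text match on the title, combined with exact facet filters."""
    needle = text.lower()
    return [
        r for r in records
        if needle in r["title"].lower()
        and all(r.get(name) == value for name, value in facets.items())
    ]


def facet_counts(hits, facet):
    """Count hits per value of one facet, e.g. to show numbers next to filter options."""
    return Counter(r[facet] for r in hits)


# Filter on the "parameter" facet, then count the remaining hits per provider.
hits = search(RECORDS, parameter="temperature")
print(facet_counts(hits, "provider"))
```

Computing the facet counts on the current hit list, rather than on all records, is what lets a faceted interface show how many results each further filter would leave.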

Another important part of the project is a comprehensive framework for data visualization that brings user-customizable map viewers into the portal. Pre-curated viewers currently support the visualization and exploration of data products from marine research. After logging in, users can create their own viewers, including data products from different sources that are served via OGC services.
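Viewers that include OGC service-based data products typically request map images from a Web Map Service (WMS). As a sketch under stated assumptions, the function below builds a standard WMS 1.3.0 GetMap request URL; the service URL and layer name are hypothetical, and nothing here describes how the portal's own viewer framework is implemented.

```python
from urllib.parse import urlencode


def wms_getmap_url(base_url, layer, bbox, width=800, height=400,
                   crs="EPSG:4326", image_format="image/png"):
    """Build an OGC WMS 1.3.0 GetMap request URL.

    In WMS 1.3.0 with CRS=EPSG:4326 the BBOX axis order is latitude first:
    (min_lat, min_lon, max_lat, max_lon).
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # empty value selects the server's default style
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": image_format,
    }
    return f"{base_url}?{urlencode(params)}"


# Hypothetical service URL and layer name.
url = wms_getmap_url("https://example.org/wms", "sea_surface_temperature",
                     (53.0, 9.0, 56.0, 14.0))
print(url)
```

A map viewer combining layers from different sources would issue one such request per layer and overlay the returned images.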

In developing the portal, we use state-of-the-art web technologies to offer user-friendly, high-performance tools for scientists. Regular demonstrations, feedback loops and usability workshops ensure that the implementation delivers added value.

Speaker: Robin Heß (Alfred-Wegener-Institut)

12:00

Beyond ESGF - Regional climate model datasets in the cloud on AWS S3 15m

The Earth System Grid Federation (ESGF) data nodes are usually the first port of call for accessing climate model datasets from WCRP-CMIP activities. ESGF currently hosts datasets from several projects, e.g., CMIP6, CORDEX, input4MIPs and obs4MIPs. The datasets are distributed across data nodes all over the world, while data access is managed by any of the ESGF web portals through a web-based GUI or the ESGF Search RESTful API. The ESGF data nodes provide different access methods, e.g., HTTPS, OPeNDAP or Globus.
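To make the ESGF Search RESTful API concrete, here is a minimal sketch that builds a dataset query URL for CORDEX data. The index node URL is an assumption (ESGF index nodes have changed over time), and the facet names shown are those we understand CORDEX datasets to use; check both against the node you query.

```python
from urllib.parse import urlencode

# Assumption: the search endpoint of one ESGF index node. Verify that the node
# is still operational before using it.
ESGF_SEARCH = "https://esgf-data.dkrz.de/esg-search/search"


def esgf_search_url(base=ESGF_SEARCH, limit=10, **facets):
    """Build a dataset query for the ESGF Search RESTful API.

    `facets` are passed through as query parameters, e.g. project, domain,
    experiment, time_frequency and variable for CORDEX.
    """
    params = {
        "type": "Dataset",                  # search datasets rather than single files
        "format": "application/solr+json",  # ask for a JSON (Solr-style) response
        "limit": limit,
        **facets,
    }
    return f"{base}?{urlencode(params)}"


url = esgf_search_url(project="CORDEX", domain="EUR-11", experiment="historical",
                      time_frequency="mon", variable="tas")
print(url)
```

Fetching the URL (network required), e.g. with `urllib.request.urlopen`, should return Solr-style JSON in which the `response` object's `docs` list holds one entry per matching dataset, including the access URLs offered by the hosting data node.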

Beyond ESGF, the Pangeo / ESGF Cloud Data Working Group has been coordinating efforts related to storing and cataloging CMIP data in the cloud, e.g., in Google Cloud and in Amazon Web Services Simple Storage Service (S3), where a large part of the WCRP-CMIP6 ensemble of global climate simulations is now available in analysis-ready, cloud-optimized (ARCO) Zarr format. Availability in the cloud has significantly lowered the barrier to working with CMIP6 datasets for users with limited resources and no access to an HPC environment, and at the same time increases the chance of reproducibility and reusability of scientific results.
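Cataloging Zarr stores in object storage relies on a predictable key layout derived from the dataset's metadata facets. The sketch below assembles an `s3://` URL from the CMIP6 data reference syntax (DRS); the facet order follows our understanding of the Pangeo CMIP6 store layout, and the bucket name and facet values are hypothetical, so this is an illustration rather than the working group's or Hereon's actual workflow.

```python
# Facet order of the CMIP6 data reference syntax (DRS), as assumed for the
# directory layout of the Pangeo CMIP6 Zarr stores. Verify against the catalog
# you are using.
CMIP6_DRS = [
    "mip_era", "activity_id", "institution_id", "source_id", "experiment_id",
    "member_id", "table_id", "variable_id", "grid_label", "version",
]


def zarr_store_url(bucket, facets, drs=CMIP6_DRS):
    """Assemble an s3:// URL for one Zarr store from its DRS facets."""
    missing = [name for name in drs if name not in facets]
    if missing:
        raise KeyError(f"missing DRS facets: {missing}")
    return f"s3://{bucket}/" + "/".join(facets[name] for name in drs) + "/"


# Hypothetical bucket name and illustrative facet values.
example = {
    "mip_era": "CMIP6", "activity_id": "CMIP", "institution_id": "MPI-M",
    "source_id": "MPI-ESM1-2-LR", "experiment_id": "historical",
    "member_id": "r1i1p1f1", "table_id": "Amon", "variable_id": "tas",
    "grid_label": "gn", "version": "v20190710",
}
print(zarr_store_url("my-cmip6-bucket", example))
```

For a publicly readable bucket, such a URL could then be opened lazily with `xarray.open_zarr(url, storage_options={"anon": True})` if `s3fs` is installed. Regional CORDEX datasets use a different DRS, so the same function could be reused by passing another facet list via `drs`.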

We are now adapting the Pangeo strategy to also publish regional climate model datasets from the CORDEX initiative on AWS S3 cloud storage. Because their data formats and metadata conventions are similar to those of the global CMIP6 datasets, the workflows require only minor adaptations. In this talk, we will present the strategy and the workflow, implemented in Python and orchestrated with GitHub Actions, and demonstrate how to access CORDEX datasets in the cloud.

Speaker: Lars Buntemeyer (Helmholtz-Zentrum Hereon)

12:15

Data collaboration without borders: bringing together people, data, and compute in the ZMT datalab 15m

Creating data collaborations that are both effective and equitable is challenging. Besides the many technical challenges, there are also significant cultural issues to deal with, for example, communication styles, privacy protection and compliance with institutional and legal norms. At ZMT Bremen, we had to address the central question “How do we build a good data collaboration with our tropical partners?” while also dealing with the restrictions of the pandemic, which laid bare the digital divides that separate us. In 2020, the ZMT datalab (www.zmt-datalab.de) was born out of the search for an answer. The datalab is a data platform that brings together people, data and compute resources, with a strategy not just to share data but to share work, without borders. Here we present the design principles and the considerations for navigating the conflicting incentives involved in building a datalab that is both open and effective.

Speaker: Arjun Chennu (arjun.chennu@leibniz-zmt.de)

12:30

webODV - Interactive online data visualization and analysis 15m

webODV is the online version of the widely used Ocean Data View software (https://odv.awi.de). In recent years, webODV (https://webodv.awi.de) has evolved into a powerful, distributed cloud system for easy online access to large data collections and for collaborative research. To date, more than 1000 users per month worldwide visit our webODV instances at:

- GEOTRACES: https://geotraces.webodv.awi.de

- EMODnet Chemistry: https://emodnet-chemistry.webodv.awi.de

- Explore: https://explore.webodv.awi.de

- MOSAiC: https://mvre.webodv.cloud.awi.de

- HIFIS: https://hifis.webodv.cloud.awi.de

- EGI-ACE: https://webodv-egi-ace.cloud.ba.infn.it

webODV facilitates the generation of maps, surface plots, section plots, scatter plots and much more for creating publication-ready visualizations. Rich documentation (https://mosaic-vre.org/docs) and video tutorials (https://mosaic-vre.org/videos/webodv) are available. webODV online analyses can be shared via links and are fully reproducible. Users can download so-called "xview" files, small XML files that contain all the instructions webODV needs to reproduce an analysis. These files can be shared with colleagues or attached to publications, supporting fully reproducible open science.

Speaker: Sebastian Mieruch (AWI)
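The webODV abstract describes "xview" files as small XML files holding the instructions needed to reproduce an analysis. The sketch below illustrates that general idea of a serialized analysis recipe with Python's standard `xml.etree.ElementTree`; the element and attribute names, the functions and the example values are all hypothetical and do not reflect the actual xview schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical "view recipe" format: element and attribute names are invented
# for illustration and are NOT the actual webODV xview schema.


def write_view(collection, plot_type, x, y, filters):
    """Serialize the settings of one analysis as a small XML document."""
    root = ET.Element("view")
    ET.SubElement(root, "collection").text = collection
    plot = ET.SubElement(root, "plot", type=plot_type)
    ET.SubElement(plot, "x").text = x
    ET.SubElement(plot, "y").text = y
    for name, value in filters.items():
        ET.SubElement(root, "filter", name=name).text = str(value)
    return ET.tostring(root, encoding="unicode")


def read_view(xml_text):
    """Parse the XML back into the settings needed to redo the analysis."""
    root = ET.fromstring(xml_text)
    plot = root.find("plot")
    return {
        "collection": root.findtext("collection"),
        "plot_type": plot.get("type"),
        "x": plot.findtext("x"),
        "y": plot.findtext("y"),
        "filters": {f.get("name"): f.text for f in root.findall("filter")},
    }


# Illustrative values only.
recipe = write_view("GEOTRACES", "scatter", "Temperature", "Depth", {"Cruise": "GA03"})
settings = read_view(recipe)
print(recipe)
```

Because the recipe stores settings rather than rendered images, anyone with access to the same data collection can regenerate the plot from it.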


-

12:45

→

13:30

Lunch 45m 8A-001 - Foyer

-

13:30

→

15:00

Program: Workshops / Interactive Sessions

-

08:00

→

08:45